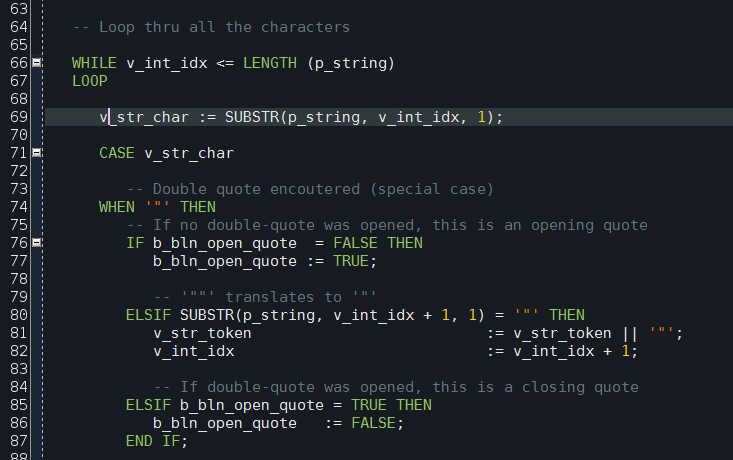

Here is a small script which shows information about running Oracle sessions. You can use commented lines to filter by an Oracle instance (in case you have a RAC), OS user, session ID, process ID or an application name.

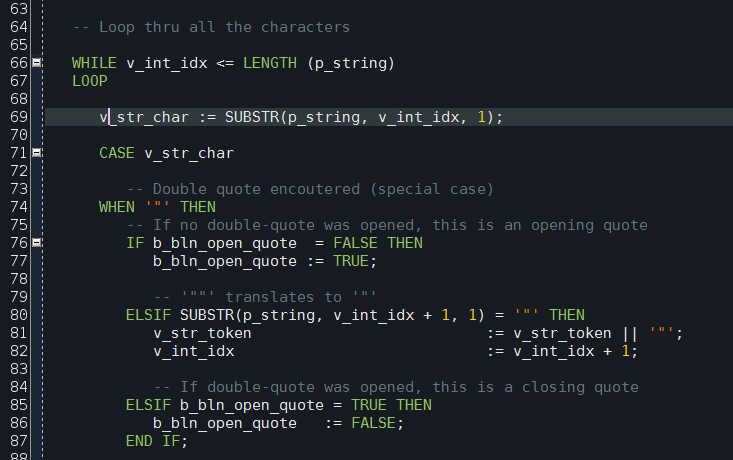

This is a spinoff of Obsidian colour scheme for Oracle SQL Developer. It is based on Obsidian Eclipse colour scheme by Morinar.

Unfortunately Oracle doesn’t make it easy to import a new colour scheme into SQL Developer, thus a little bit of hacking is required.

-

Close SQL Developer. This is important. If you modify the scheme file while SQL Developer is open, your changes won’t be saved.

-

Locate file dtcache.xml in the SQL Developer’s settings directory. On my system it is located in directory C:\Users\sergey\AppData\Roaming\SQL Developer\system4.0.3.16.84\o.ide.12.1.3.2.41.140908.1359

-

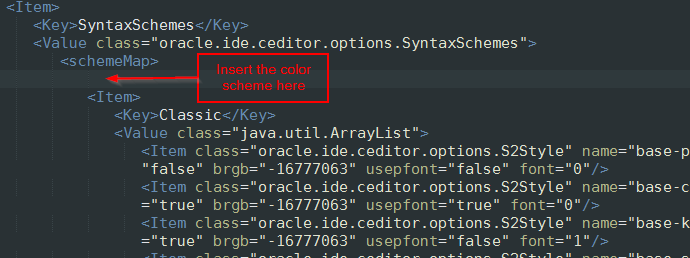

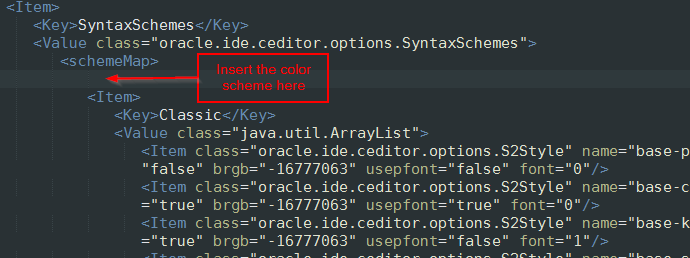

Locate <schemeMap> tag inside dtcache.xml file. Insert the content of ozbsidian-scheme.xml file inside <schemeMap> alongside the other colour schemes. Be careful not to break the XML.

-

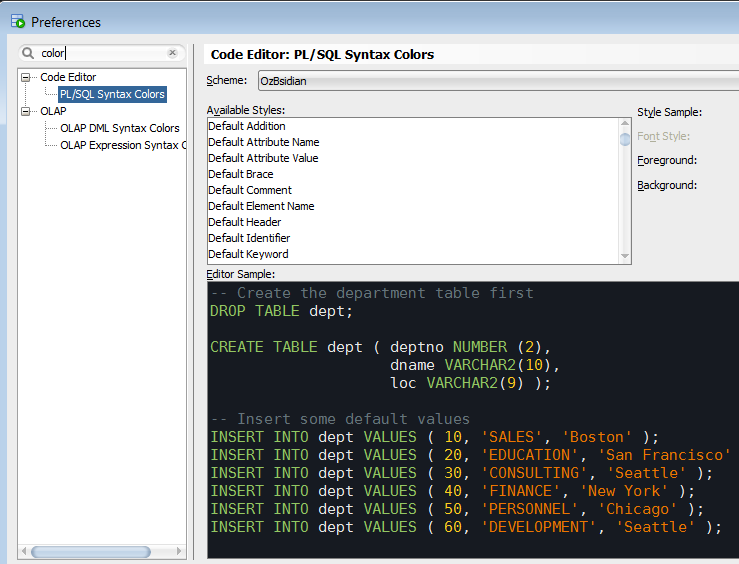

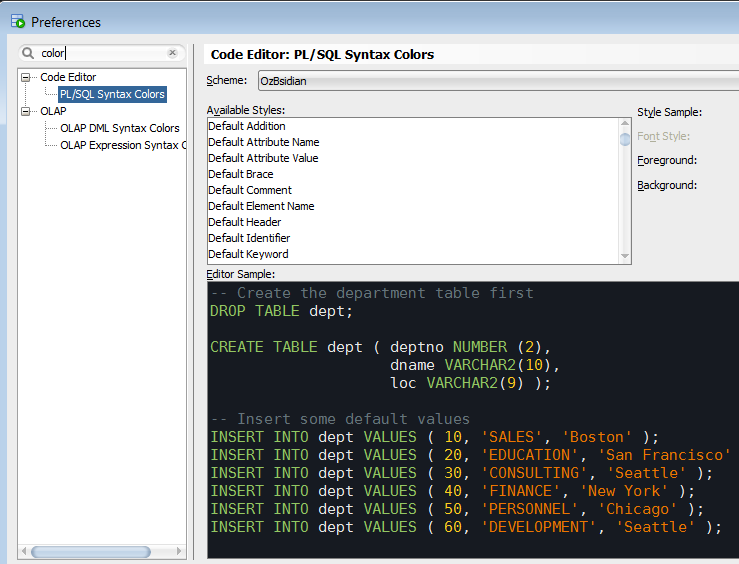

Launch SQL Developer. Navigate to menu Tools->Preferences, then select item Code Editor -> PL/SQL Syntax Colours in the left pane.

-

Select “OzBsidian” in the “Scheme” drop down list on the top.

The colours are mostly match Obsidian scheme, although not exactly. For instance, the background is a bit darker. Hence the name is OzBsidian to differentiate it from the original scheme.

You can download the colour scheme with the installation instruction from my GitHub account.

Enjoy!

If you ever wanted to know how what’s taking space in an Oracle database, or how large is the table you’re working on, here’s a script which answers these questions. It shows the size of all the database objects large than 10 Mb in a particular database schema.

The following columns are returned:

- Owner schema.

- Object name and type (INDEX, TABLE, etc.).

- Name of the table this object is associated with. E.g. indexes are associated with their parent tables.

- Database space occupied by the object in megabytes.

- Tablespace this object is in.

- Number of extents allocated for the object.

- Size of the initial extent in bytes.

- Total database size occupied by the parent table. E.g. for indexes it will be the size of the parent * table plus sizes of all the indexes on that table.

This script is based on the Stackoverflow.com discussion.

A few days ago I installed Oracle Linux in an Oracle VirtualBox VM. Once it was installed I found that eth0 interface wasn’t starting upon boot.

That was unusual. I am myself a Linux enthusiast, and I regularly download and install lots of distributions, and, by and large, network works in them out of the box.

Today I finally got around to troubleshooting this problem. It took me a couple of hours digging through the scripts, setting trace points, and reading logs; and here’s what I found.

For the reference, this was my configuration:

- Oracle VM VirtualBox 4.1.22 r80657

- Host: Windows 7 64-bit

- Linux: Oracle Linux Server release 6.3

- Kernel 2.6.39-200.24.1.el6uek.x86_64 on x86_64

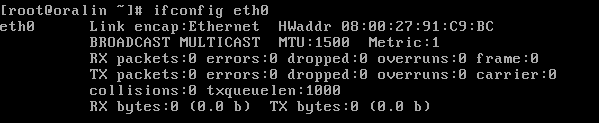

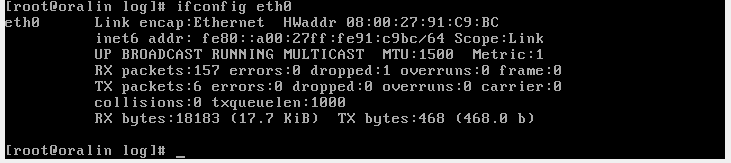

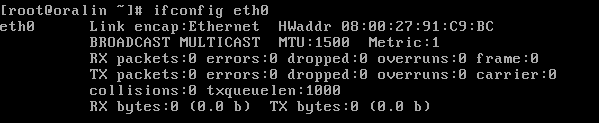

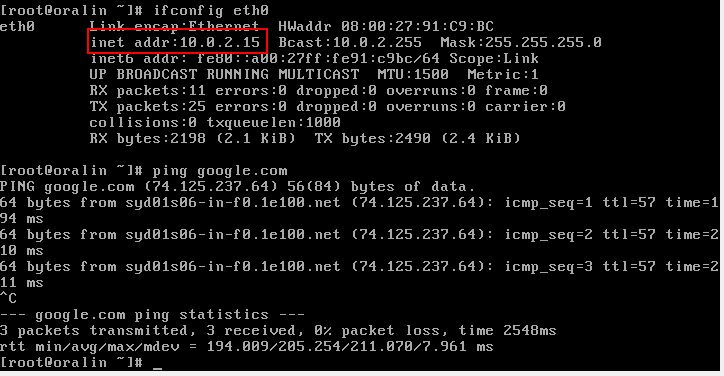

As I said, upon boot eth0 was down. If I tried to bring it up with ifconfig eth0 up it came up in IPV6 mode only, no IPV4:

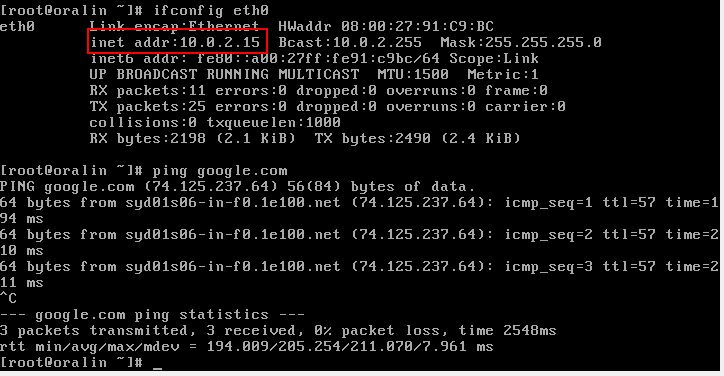

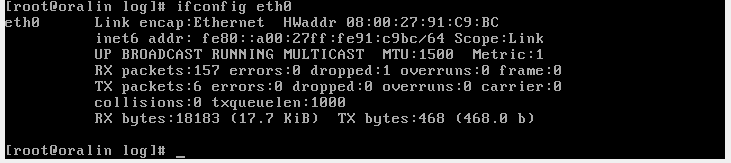

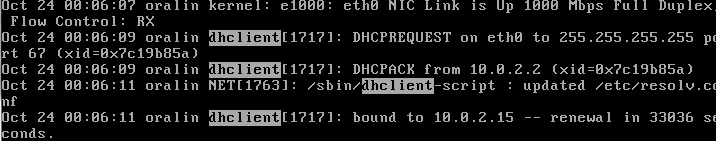

Hm, weird. What was even more weird was that if I brought it up with ifup eth0 instead of ifconfig eth0 up, IPV4 network started succesfully:

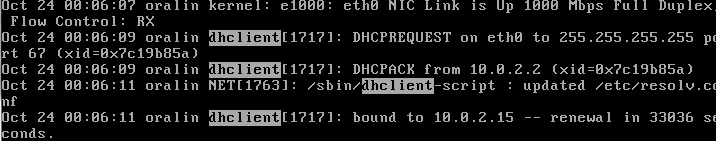

Obviously, these 2 were are different. The reason ifup worked was because it called dhclient to obtain an IP address from a DHCP server

Whereas ifconfig didn’t make that call. And because the network interface did not have an IP address, it stayed down.

So, what is the difference between ifup and ifconfig up?

Well, ifup is actually a script located in /etc/sysconfig/network-scripts.

During the system boot the network subsystem is brought up via startup

script /etc/rc2.d/S10network. That script goes through all the network interfaces it can find and

brings them up during the boot. What’s interesting, I found that it uses a set of configuration files

/etc/sysconfig/network-scripts/ifcfg-*<interface_name></interface_name>* to determine if a particular interface needs to be brought up during the boot time. There is one config file for each interface. I’m not sure

when they are created, maybe at install time.

In my case I found that one of the parameters in ifcfg-eth0 file was ONBOOT=no.

Turned out the network startup script uses that parameter to determine if the particular interface should be brought up at the boot time.

So, I changed it to ONBOOT=yes and everything worked.

Now when the system starts, eth0 is up and running.

Problem solved!

Update:

So, the mystery is finally solved. There is “Connect automatically” checkbox on the installer’s network configuration screen (item 10 here).

If this checkbox is unchecked, network interfaces do not come up at system start-up.

Many thanks to the Oracle Linix team for helping me in this investigation.

All database applications can be divided into 2 classes: OLTP and data

warehouses. OLTP stands for Online Transactions Processing. It’s easy to explain

the difference between them by an example.

Imagine yourself buying groceries at your local supermarket. When you

are done filling your shopping basket and finally come to checkout,

here’s what happens:

-

A shopping assistant at the checkout register picks up your first

item and scans its barcode. The computer built into the register

turns the barcode into a series of characters and digits and queries

the store’s database for the item’s description, price and picture.

Then it adds the item into your virtual shopping cart.

-

You reach for your next item and all of those steps happen again: a

few queries sent to the database, a few tables updated. And then

again and again, until your basket is empty.

-

You swipe your credit card. The shop’s system sends a request to the

credit card processing centre. It checks that you card is valid and

that you are not over your credit limit. Then it asks you for your

pin code, verifies it, and if all the checks are successful,

withdraws the money.

-

Then the groceries you bought are marked as sold in the inventory

control system. A receipt is printed, and the virtual shopping

basket is cleared. That’s it. Thank you for shopping with us.

It probably took you less than 5 minutes to go through the register. But

the number of database queries and updates it resulted in was likely in

hundreds. Now multiply that by the number of checkout registers in the

shop, and you’ll get the picture. Checkout register is a typical OLTP

system.

OLTP applications are characterized by large volume of atomic database

transactions: retrieve 1 row from a database table here, 3 there, insert

a few lines into a third table or update a row of yet another table.

Most of OLTP-type applications are interactive: you press a button and

expect the system to respond within a few seconds at most. That presents

a challenge if the application is a multi-user system with hundreds of

users working at the same time. It is not uncommon for an OLTP system to

crunch tens of thousands, even millions of database operations per

minute. And because of that these operations need to be extremely fast.

Therefore OLTP systems are specifically engineered for a low latency in

conditions of high volume of elementary database operations.

Now imagine that at the end of the financial year the COO of the

supermarket chain requires a detailed sales report. He wants to know

which products were selling faster comparing to the previous 10 years

with a breakdown by geographical areas. He also wants to know the

correlation between the increased prices and sales volumes aggregated by

product categories, and so on. Every business requires hundreds and

hundreds of such reports, and not just at the end of the financial year

but sometimes every day. Financial departments, sales, marketing, and

management – they all want to know how the business is doing. In fact,

there is a special buzzword for that kind of reporting – “Business

Intelligence”. Another buzzword “Data mining” means “Looking for answers

for which we don’t know questions yet”. Data mining looks for trends

within the data and tries to find correlations to the known parameters.

An example of a data mining query is “Find out if the sales volume

changed within the last year and what else is changed that could explain

that”.

Business Intelligence and Data Mining reports tend to be highly complex.

Most of times they require querying data across very large datasets,

such as all sales over the last 10 years, then aggregating it, then

calculating summaries, averages, correlations and so on. These are very

different types of operations from which OLTP systems are designed for.

And that is why running them against OLTP databases is usually not a

good idea. This is what data warehouses are for.

Data warehouses are database systems optimised for complex database

queries over large data sets. They are predominantly used for Business

Intelligence and Data Mining reporting. Within large corporate data

warehouses it is common to have “sections” designed for specific

purpose, for example for particular types of financial reports. These

sections along with their business intelligence logic are called

Datamarts.

Data warehouses usually source data from multiple systems. For example,

sales data, financial data, inventory, vendors, etc. may come from

different geographically distributed platforms which otherwise don’t

talk to each other. Having all this information in one data warehouse

enables business users to get reports such as profits on sales of

certain goods per supplier.